While analyses of non-randomized health care databases—insurance claims, electronic health records (EHRs), and more—can yield robust real-world evidence (RWE), reporting inconsistencies and convoluted prose sometimes hamstring data review and high-stakes medical and regulatory decision-making.

To boost clarity and confidence in RWE reporting, researchers have proposed a new graphical design standard that translates longitudinal health care database study parameters, including key temporal anchors in cohorts, into a common visual language and framework.

Created by a multi-stakeholder team including Shirley Wang, Ph.D., M.Sc., and Aetion co-founders Jeremy Rassen, Sc.D., and Sebastian Schneeweiss, M.D., Sc.D., the standardized structure and terminology of the graphical reporting template are designed to simplify study review by health care decision-makers and streamline study reproducibility—a critical driver of RWE acceptance. Early feedback on the template has been positive, with several organizations adopting it for database reporting purposes.

We spoke with Dr. Wang about the implications of this graphical reporting template for improving the clarity and reproducibility of RWE, and confidence in it. Dr. Wang is an assistant professor of medicine at Harvard Medical School; an associate epidemiologist in the Division of Pharmacoepidemiology and Pharmacoeconomics at Brigham and Women’s Hospital (BWH); and co-director of the REPEAT initiative, which uses the Aetion Evidence Platform® to replicate the results of large health care database studies.

Dr. Wang’s responses have been edited for clarity and length.

Q: How can a graphical visualization framework advance reproducibility and assessment of validity of health care database analyses?

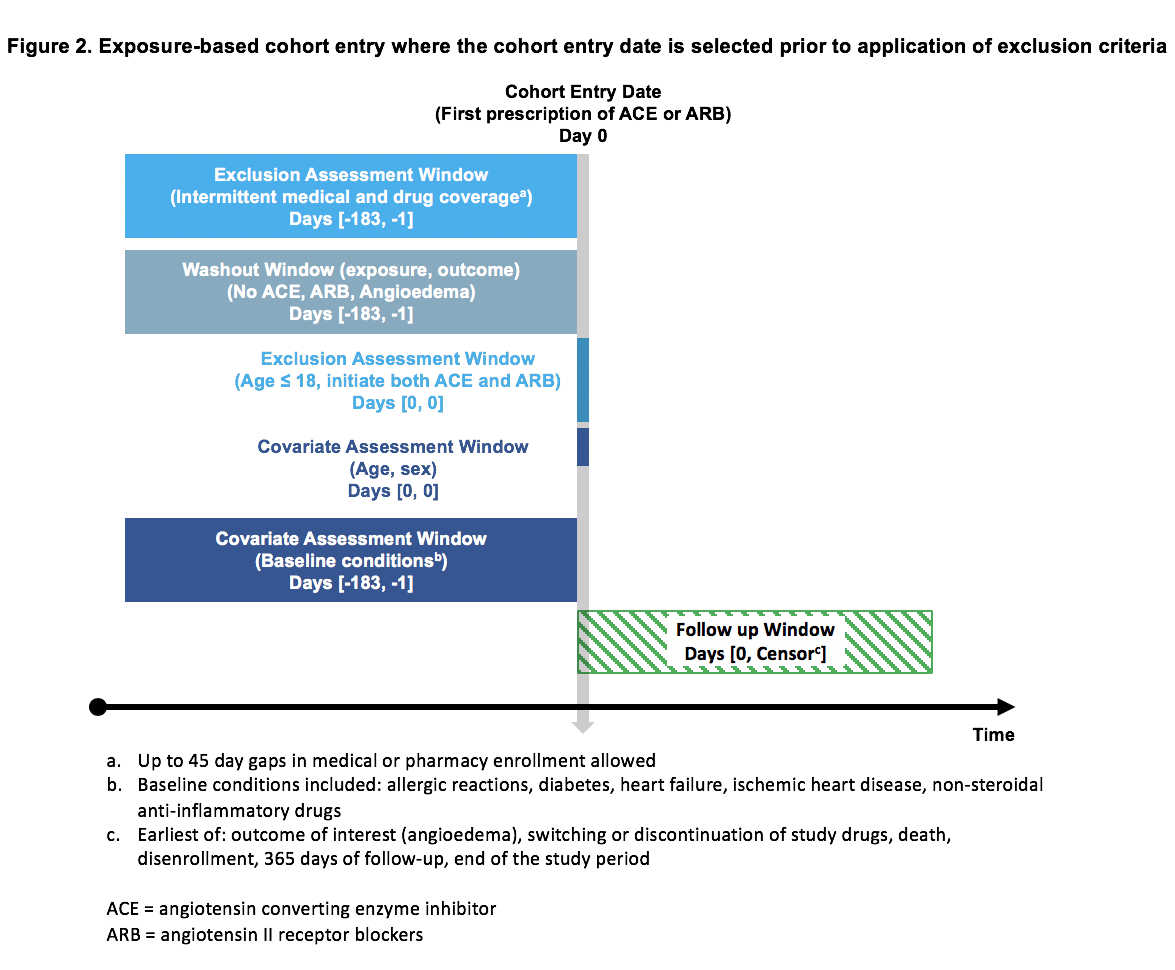

A: The graphical framework can advance reproducibility by increasing the clarity of communication about a study’s implementation, which makes the study easier to reproduce. Clarity about what was done is also a necessary precondition for assessing validity. Many validity issues arise from problems in defining temporality when creating analytic cohorts, and the design diagram makes temporality unambiguous. In the design template shown above, you see temporality both visually, in the bars, and numerically, via the standard mathematical notation of days in inclusive brackets. Seeing temporality in the diagram makes it easier both to assess a study’s validity and to reproduce it.
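To make the inclusive-bracket notation concrete, here is a minimal Python sketch, assuming a design in which every window is expressed in days relative to the cohort entry date (day 0) and both endpoints are included. The `Window` class, its methods, the example windows, and the entry date are hypothetical illustrations chosen for this sketch; they are not taken from the published template or from any Aetion software.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Window:
    """A hypothetical design window in days relative to cohort entry (day 0).

    Both bounds are inclusive, mirroring bracket notation such as [-180, -1].
    """
    name: str
    start: int  # first day in the window, relative to day 0
    end: int    # last day in the window, relative to day 0

    def label(self) -> str:
        # Render the window in bracket notation, as a diagram would show it.
        return f"{self.name}: days [{self.start}, {self.end}]"

    def length(self) -> int:
        # Inclusive bounds, so [-180, -1] spans 180 days, not 179.
        return self.end - self.start + 1

    def calendar_span(self, entry: date) -> tuple[date, date]:
        # Translate the relative window into calendar dates for one patient.
        return entry + timedelta(days=self.start), entry + timedelta(days=self.end)

    def contains(self, entry: date, event: date) -> bool:
        # An event qualifies if its offset from day 0 lies within the inclusive bounds.
        offset = (event - entry).days
        return self.start <= offset <= self.end


# Hypothetical windows for illustration only; not values from the published template.
DESIGN = [
    Window("Washout (no prior exposure)", -180, -1),
    Window("Covariate assessment", -180, 0),
    Window("Follow-up", 1, 365),
]

if __name__ == "__main__":
    entry = date(2020, 3, 1)  # hypothetical cohort entry date for one patient
    for window in DESIGN:
        first, last = window.calendar_span(entry)
        print(f"{window.label()} -> {first} to {last} ({window.length()} days)")
```

The `+ 1` in `length()` is exactly the kind of detail the bracket notation is meant to pin down: a window written as [-180, -1] includes both endpoints, so it covers 180 days and stops the day before cohort entry.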

Q: What are the implications of this visual design standard for health care database analyses and RWE?

A: Ideally, the design diagram would be the default Figure 1 for papers and reports, just as a Table 1 describing baseline patient characteristics is included almost automatically in every paper. RWE stakeholders have also told us that they see the value of the design diagram in improved communication, either externally, between researchers, sponsors, and decision-makers, or internally, within multidisciplinary teams. This clarity would mean fewer unstated assumptions across teams, groups, or subdisciplines that use different language to describe study elements. Ultimately, this improves confidence in RWE.

Q: Have you expanded or modified the framework since its publication in 2017?

A: Other than minor fine-tuning, no. However, we’ve begun working with the Food and Drug Administration (FDA) and a consortium of sponsors to develop a structured template and reporting tool for RWE (STaRT-RWE) that incorporates design visualization. That template comprises the design diagram and a series of parameter tables and appendices. ISPE, the International Society for Pharmacoepidemiology, also recently funded a proposal to develop a manuscript that will allow us to further explore the use of visual applications in pharmacoepidemiology.

Additionally, participants in focus groups evaluating the efficiency, usability, and value of the structured reporting template have been very enthusiastic. Many are adapting the design diagram and incorporating it into their processes to facilitate communication.

Q: What was the model or inspiration for the graphical framework?

A: Following the ISPE-ISPOR Joint Task Force paper “Reporting to improve reproducibility and facilitate validity assessment for health care database studies V1.0,” we decided to home in on a common visual language for study design. Much of the design framework is derived from the Harvard T.H. Chan School of Public Health’s educational materials on study design. The biggest challenge was deciding on the terminology and how much supplemental text to include in the figure, versus simplifying and relying on visual markers. We tried to ‘pressure test’ the design by developing templates for several types of study designs: not only the cohort design depicted earlier, but also other core sampling approaches, such as case-control and self-controlled designs.

Q: When envisioning a visual design approach for RWE, what were the challenges of translating temporality into graphical form?

A: The design diagram challenges users to consider the relationship of windows of assessment to a primary anchor, such as the cohort entry date, and to think more deeply about measurements. However, some database studies that use multiple, complex time windows could result in more visually complicated diagrams.

Q: Can you define the connection between the visual framework and study reproducibility?

A: A visual framework is one strategy to facilitate unambiguous communication about RWE generation. When there isn’t ambiguity, we have the ability to reproduce studies and evaluate validity because we understand what was done. In my team’s efforts to replicate large health care database studies, if the papers we were trying to replicate had provided design diagrams, our work would have been much easier. Often, when struggling to understand a study’s implementation, we would attempt to diagram it to piece together what was described in different parts of the methods section. In that sense, the visual framework and reproducibility are closely related. Had there been a standard visual framework for communicating study design, we wouldn’t have struggled so much with replication of published studies.

Q: Collaborators in the recent Friends of Cancer Research initiative, Pilot 2.0, examined key questions in oncology through RWE and identified several reporting challenges, including data quality and “missingness” as well as application of measures across multiple data sources. Can a visualization framework alleviate these concerns or reduce barriers to RWE acceptance?

A: Design diagrams can help to alleviate concerns regarding study design, but they cannot solve concerns about data quality, missingness, and heterogeneity in the underlying data. The design diagram addresses part of the problem, but you need more, which is why we’re developing STaRT-RWE, the structured template and reporting tool for RWE, with the FDA.

Q: Any suggestions for simplifying the convoluted prose sometimes found in studies’ methods sections?

A: The design diagram is great at providing a high-level conceptual summary. The STaRT-RWE reporting template proposes a series of tables that deliver key details at a granular level, with a minimum of prose. Those tables could exist as an appendix or be included in the manuscript. Either way, a good summary table of study parameters, one that provides more detail than the visualization, would still be needed. Used together, the diagram and the table could help simplify convoluted prose.

Q: What are the next steps for the visualization framework?

A: Establishing transparent and reproducible evidence is the foundation. Once we achieve that first step, we want to evaluate the robustness of the evidence and its transportability to different populations or data sources. Then, if we have achieved clarity of reporting, we could compare apples to apples: for studies that seem to ask the same question, we could understand differences in implementation and how those differences might play out in their results.

Down the road, next steps could include creating more incentives for removing barriers to reporting and demonstrating the value of doing so, as well as clarifying the process of RWE generation. I’m part of an RWE Transparency Initiative led by the Professional Society for Health Economics and Outcomes Research (ISPOR), ISPE, Duke-Margolis, and the National Pharmaceutical Council. We are working toward the creation of a centralized study registration process for hypothesis-evaluating RWE. At this point, the registration process is based on structured reporting tables with design visualization, but we are evaluating additional components, such as lock-box capacity to embargo registration data for a set number of years. In the meantime, I am working with a graduate student who is developing a Shiny app with “click-and-drag” functionality for creating design figures within the visualization framework.

Those interested may download and adapt the design diagram figures and templates free of charge, with appropriate attribution.